I’m planning on building a salary calculator based on the salary survey data I played around with earlier. This time I’m planning on going a lot deeper.

I want your help and feedback! I want to know what would make a calculator most useful to you. Feel free to poke holes in my methodology and tell me how a real data scientist would handle this project.

In this post, I’m going to outline some initial findings as well as how I’m planning to approach this project. All of the information below is based on a narrow subset:

- USA, full postal code

- DBA job

- Between $15,000 and $165,000

Regarding zip codes, some people only entered a portion of their zip for privacy’s sake. In the final analysis, I plan on taking into account the ~200 US individuals who did that.
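The subset rules above could be sketched as a simple row filter. This is only an illustration: the field names (`country`, `postal_code`, `job_title`, `salary_usd`) and the sample rows are made up, and I’m assuming a “full” postal code means a 5-digit zip (optionally ZIP+4) and that the salary bounds are inclusive.

```python
import re

# Assumption: a "full" US postal code is 5 digits, optionally followed by -4 digits.
FULL_ZIP = re.compile(r"^\d{5}(-\d{4})?$")

def in_subset(row):
    """Return True if a survey row falls inside the narrow subset.

    The dictionary keys are hypothetical; the real survey columns may differ.
    """
    return (
        row["country"] == "USA"
        and FULL_ZIP.match(row["postal_code"]) is not None
        and row["job_title"] == "DBA"
        and 15_000 <= row["salary_usd"] <= 165_000
    )

# Made-up rows, only to exercise the filter.
rows = [
    {"country": "USA", "postal_code": "43215", "job_title": "DBA", "salary_usd": 95_000},
    {"country": "USA", "postal_code": "432", "job_title": "DBA", "salary_usd": 90_000},    # partial zip
    {"country": "USA", "postal_code": "10001", "job_title": "DBA", "salary_usd": 250_000}, # outside salary range
]

subset = [r for r in rows if in_subset(r)]
print(len(subset))
```

Rows with a partial zip are dropped here; handling them later would need a separate rule.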

Initial findings

The data isn’t very predictive

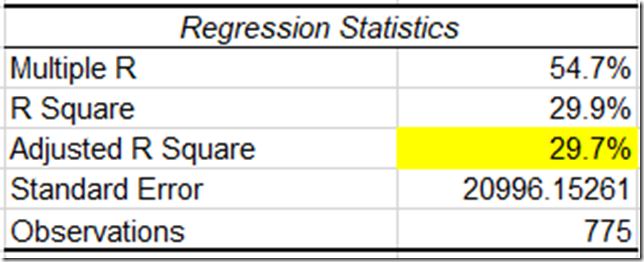

So I’m using something called a multiple linear regression to make a formula to predict your salary based on specific variables. Unfortunately, the highest coefficient of determination (or R²) I’ve been able to get is 0.37, which means, as far as I understand it, that at most the model explains 37% of the variation in salary.
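For anyone who hasn’t run into R² before, here is a minimal sketch of how it is computed: one minus the ratio of the squared prediction errors to the squared deviations from the average. The salaries below are invented purely to exercise the function; they are not survey data.

```python
def r_squared(actual, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_actual = sum(actual) / len(actual)
    # Squared error left over after applying the model.
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    # Squared error if we had just guessed the average for everyone.
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    return 1 - ss_res / ss_tot

# Invented numbers, not survey data.
actual = [60_000, 80_000, 100_000, 120_000]
predicted = [70_000, 80_000, 100_000, 110_000]

print(round(r_squared(actual, predicted), 2))
```

A model that always predicts the average scores 0, and a perfect model scores 1.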

Additionally, the spread on the results isn’t great either. The standard deviation, a measure of spread, is about $25,000 on the original subset of data. If salaries are roughly normally distributed, that means we’d expect 68% to be within +/- $25,000 of the average and 95% to be within +/- $50,000 of the average. So what happens when we apply our model?

When we apply the model we get something called residuals, which are basically the differences between what we predicted and what the actual salaries were. The standard deviation of those residuals is $20,000, which means that our confidence range is going to be roughly +/- $20,000 (68% of the time) to +/- $40,000 (95% of the time). That doesn’t seem like a great range to me.
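As a sanity check, the two standard deviations line up with the R² value. This is a rough sketch that ignores degrees-of-freedom corrections and assumes the residuals are roughly normal; the variable names are mine.

```python
sd_salary = 25_000    # spread of salaries in the original subset
sd_residual = 20_000  # spread of the residuals after applying the model

# Share of variance explained = 1 - (residual variance / total variance).
implied_r2 = 1 - (sd_residual / sd_salary) ** 2

# Approximate prediction ranges under a normal assumption.
range_68 = 1 * sd_residual  # about 68% of predictions land within this
range_95 = 2 * sd_residual  # about 95% of predictions land within this

print(round(implied_r2, 2), range_68, range_95)
```

The implied value of about 0.36 is close to the 0.37 reported by the regression, so the numbers are at least consistent with each other.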

There are a few strong indicators

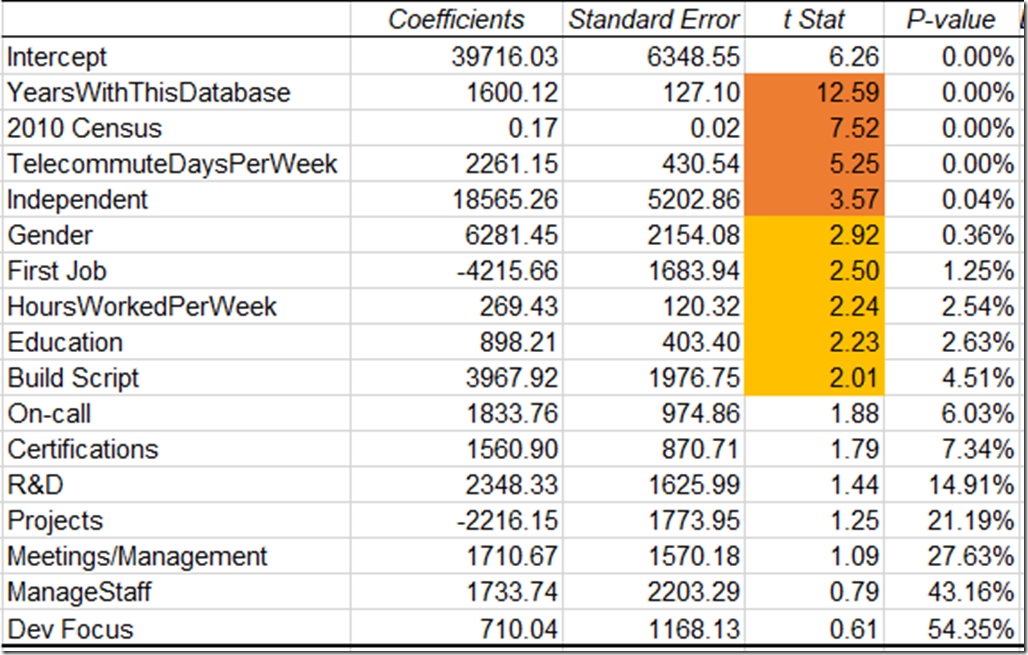

Let’s take a look at what we get when we do a multiple regression with the Excel Analysis ToolPak add-in:

The two biggest factors by far seem to be how long you’ve worked and where you live. In fact, we can explain 30% of the variance using those two variables:

The two other variables that are very strong are whether you telecommute and whether you are independent. When we add those, our adjusted R² goes up to 33%.
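Adjusted R² penalizes a model for each extra variable, so it only goes up when a new variable earns its keep. A minimal sketch of the standard formula follows; the row and predictor counts in the example are placeholders, not the survey’s actual numbers.

```python
def adjusted_r_squared(r2, n_rows, n_predictors):
    """Adjusted R^2 = 1 - (1 - R^2) * (n - 1) / (n - p - 1)."""
    return 1 - (1 - r2) * (n_rows - 1) / (n_rows - n_predictors - 1)

# Placeholder values chosen for easy arithmetic, not taken from the survey.
print(adjusted_r_squared(0.5, n_rows=11, n_predictors=2))
```

With a large number of rows and only a handful of predictors, the adjustment is small, so R² and adjusted R² end up close together.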

Then after that we have a handful of variables that are statistically significant at the 5% level (a p-value below 0.05):

- Gender. It’s still a bit early to jump to conclusions, but it looks like being female might cost you $6,000 per year. This is after controlling for years of experience, education, hours worked, and if this is your first job. Gender could still be tied to other factors like a gap in your career or if you negotiate pay raises.

- First Job. I defined “first job” as having identical values for years of experience and years in this job. If you haven’t changed jobs, it could be costing you $4,000, which lines up with my personal experience.

- Hours worked per week. This is basically what you would expect.

- Education. This is the number of years of education you received outside of high school.

- Build Scripts and automation. One of the tasks people could check was if they are automating their work. Out of all the tasks people could list, this seems to have the biggest impact.
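The variables in this list could be derived from a raw survey row along these lines. Treat it as a sketch: every dictionary key, the `"Female"` value, and the sample row are hypothetical stand-ins for whatever the real survey columns look like.

```python
def extract_features(row):
    """Turn one raw survey row into numeric regression features.

    All keys are hypothetical; the real survey columns may differ.
    """
    return {
        "years_experience": row["years_experience"],
        # "First job": years of experience equals years in the current job.
        "first_job": 1 if row["years_experience"] == row["years_in_job"] else 0,
        "is_female": 1 if row["gender"] == "Female" else 0,
        "hours_per_week": row["hours_per_week"],
        # Years of education beyond high school.
        "education_years": row["education_years"],
        "automates": 1 if "Build Scripts and automation" in row["tasks"] else 0,
    }

# Made-up respondent, only to show the shape of the output.
sample = {
    "years_experience": 6,
    "years_in_job": 6,
    "gender": "Female",
    "hours_per_week": 45,
    "education_years": 4,
    "tasks": ["Build Scripts and automation", "Backups"],
}

features = extract_features(sample)
print(features)
```

Yes/no answers become 0/1 indicator columns so that the regression can assign each one a dollar amount.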

There are some interesting correlations

Part of doing a multiple regression is making sure your variables aren’t too strongly correlated or “collinear”. As part of this, it is possible to find some interesting correlations.

- If you are on-call, you are less likely to have post-secondary education. You are also probably overworked and learning PowerShell (no surprise there).

- Certifications correlate negatively with being a dev-dba instead of a production dba.

- If this is your first job, you are less likely to be working more than 40 hours per week. Maybe that $4,000 pay cut is worth it.

- Independents also work fewer hours per week. So maybe your second job should be going independent.

- If you telecommute, you might make $2,000 more per year for every day of the week you telecommute; but you are going to be working more hours as well.
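Correlations like these fall out of a pairwise check on the feature columns. Here is a rough sketch using the Pearson correlation coefficient. The column names, the toy values, and the 0.8 cutoff for “too collinear” are all my assumptions, not results from the survey.

```python
from itertools import combinations
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length columns."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)

def collinear_pairs(columns, threshold=0.8):
    """Return the names of column pairs whose |correlation| meets the threshold."""
    flagged = []
    for a, b in combinations(columns, 2):
        if abs(pearson(columns[a], columns[b])) >= threshold:
            flagged.append((a, b))
    return flagged

# Toy columns, invented for illustration.
columns = {
    "years_experience": [1, 3, 5, 10, 15],
    "years_in_job": [1, 2, 4, 9, 15],
    "hours_per_week": [40, 45, 40, 50, 40],
}

print(collinear_pairs(columns))
```

In this toy data only years of experience and years in the current job get flagged, which is the kind of pair you would collapse into one feature before running the regression.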

Plans moving forward

So here is the current outline for this blog series:

- Identifying features (variables)

- Data cleanup

- Extracting features

- Removing collinear features

- Performing multiple regression

- Coding a calculator in JavaScript

- Reimplementing everything in R
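To give a feel for the “performing multiple regression” and calculator steps, here is a minimal ordinary least squares sketch in Python that solves the normal equations directly. It is not the Excel, JavaScript, or R implementation this series will use. The toy data is generated from a made-up formula (a $50,000 base and $2,000 per year of experience are invented; only the $4,000 first-job penalty echoes the finding above), so the fit should simply recover those numbers.

```python
def fit_ols(rows, targets):
    """Fit y = b0 + b1*x1 + ... by solving the normal equations (X'X) b = X'y."""
    X = [[1.0] + list(r) for r in rows]  # prepend a column of 1s for the intercept
    k = len(X[0])
    # Augmented matrix [X'X | X'y].
    A = [
        [sum(x[i] * x[j] for x in X) for j in range(k)]
        + [sum(x[i] * y for x, y in zip(X, targets))]
        for i in range(k)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(k):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[i][k] / A[i][i] for i in range(k)]

def predict(coefs, features):
    """The 'calculator': intercept plus each coefficient times its feature."""
    return coefs[0] + sum(c * f for c, f in zip(coefs[1:], features))

# Toy data: (years_experience, first_job), generated from a made-up formula.
rows = [(1, 1), (3, 0), (5, 1), (10, 0), (15, 0), (8, 1)]
targets = [50_000 + 2_000 * years - 4_000 * first for years, first in rows]

coefs = fit_ols(rows, targets)
print([round(c) for c in coefs])
print(round(predict(coefs, (12, 0))))
```

Real survey data will be noisy, so the fitted coefficients won’t match any tidy formula, and a library routine would be a safer choice than hand-rolled elimination.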

So let me know what you think. I plan on making all of the data and code freely available on GitHub.
