I often ask people with more experience than me “How do you stay relevant in our field?”. I joke that I live in perennial fear of being replaced someday, by a 22 year-old with no family commitments. Really, it’s a joke. Really, it’s a fear.

So, let’s talk about how to keep up with technology.

First, we must grieve.

So here’s the secret to keeping up with technology. You can’t.

There are too many technologies, too many features, too many updates:

- SQL Server 2017 is coming out this year.

- Big data technologies are so numerous as to be indistinguishable from Pokemon.

- PowerBI ships features on a weekly basis.

- Worst of all, you’ve got NoSQL which is literally the mathematical dual of SQL. It’s everything that SQL isn’t, by definition!

It’s all too much.

This realization is likely to be expressed in the 5 stages of grief.

- Denial. Other people can keep up, why can’t I? I’m learning tons of stuff all the time! This is easy.

- Anger. Why they heck are they making releases every month!? When did Microsoft go agile? I thought SQL Server versions every 2 years was bad enough.

- Bargaining. Okay, maybe if I stick to core SQL stuff, I’ll be fine. Hadoop seems like a fad. And Azure is never going to be popular with my company.

- Depression. This is impossible. I’m going to lose my job when I’m 40 years old and arthritis starts kicking in.

- Acceptance. There’s more happening than I can ever learn, but Hacker News doesn’t define my success as an IT professional.

This post isn’t about doing the impossible. It’s about making the most of your resources. That’s something that you can do.

Are you asking the right questions?

I would like to propose that it’s not about keep up with technology at all. This is a second-order goal, a proxy of sorts. Really, there are two questions at the heart of things:

- How do I keep my job?

- How do I keep my friends?

This is why technology causes so much angst. We want to learn enough that we can put food on the table; and we want to do it in short enough time that it doesn’t destroy our personal lives.

Next, we must think.

In order to get ahold of the the problem, let’s use some analogies: investments and radioactive decay.

How learning is like investing

Do you have a retirement account? If so, do you invest primarily in stocks or bonds? Why?

If you are at all young, the you invest primarily in stocks. That’s because stocks have a higher rate of growth than bond. You are trying to outrun inflation, where the value of your money is steadily decreasing. This is like your current knowledge becoming outdated. All that vb6 coding knowledge is like a pile of cash in your mattress. When I was a kid, $20 was a lot of money.

The next question is, do you invest in just one stock, like Apple? No, you diversify your portfolio. High growth stocks go up, on average. Some however, tank. High growth means high volatility. A good example is Apache Flex. It used to be a really promising application platform, until Steve Jobs killed Flash.

So we’ve got two risks to our learning portfolio: Losing value in our existing knowledge, and making the wrong choices for our new knowledge. These different risks have different mitigation strategies.

Specialization and generalization

These comparisons to bonds and stocks relates to the challenges of specialization versus generalization. We specializes to pay the bills. We generalize to keep our jobs.

Specialization is how we get paid. The reason anyone pays us is because we have skills or knowledge that is not quickly acquired. The reason consultants like David Klee make gobs of money is that they have taken a subject, like virtualizing SQL, and have gotten really good at it. To get paid more than minimum wage you are going to need some level of specialization.

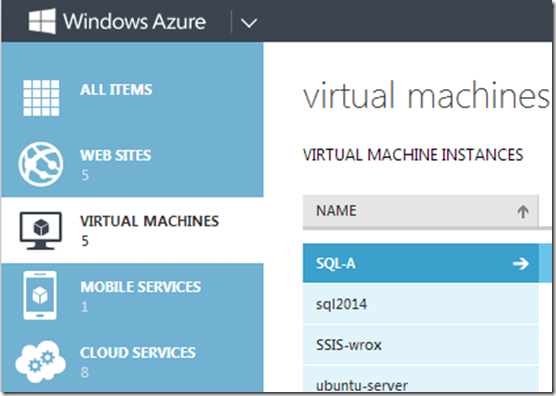

Generalization is how we get paid in 10 years. You need to be specialized to get paid right now, it’s a short term investment. However, that investment decays, just like the value of money in your mattress. To get paid a decade from now, you need to broaden your horizons. If you are a data person, it might mean learning R and python. It might mean learning Azure. Heck, it might mean PowerShell and Docker.

The tension here is that you need both. You need paid now AND in 10 years. Kevin Feasel talks about trying to find that right balance. I’m not here to tell you what that balance is. What’s important is that each has different constraints. Specialization requires focus. Generalization requires time. More on that later.

How learning is like radioactive decay

Let’s take another approach.

Our knowledge has a limited shelf-life. Allen White said, in the SQL Data Partners podcast, that you have to retool yourself every 5 years. In college, I remember joking that half of what you knew would be useless in 5 years.

Well, what if we took that literally? How would we model that? How would we think about that?

In nuclear physics, there is the idea of a half-life. You have radioactive material that decays in half every X units of time. Why not apply this concept to IT? The half-life in this example then would be 5 years.

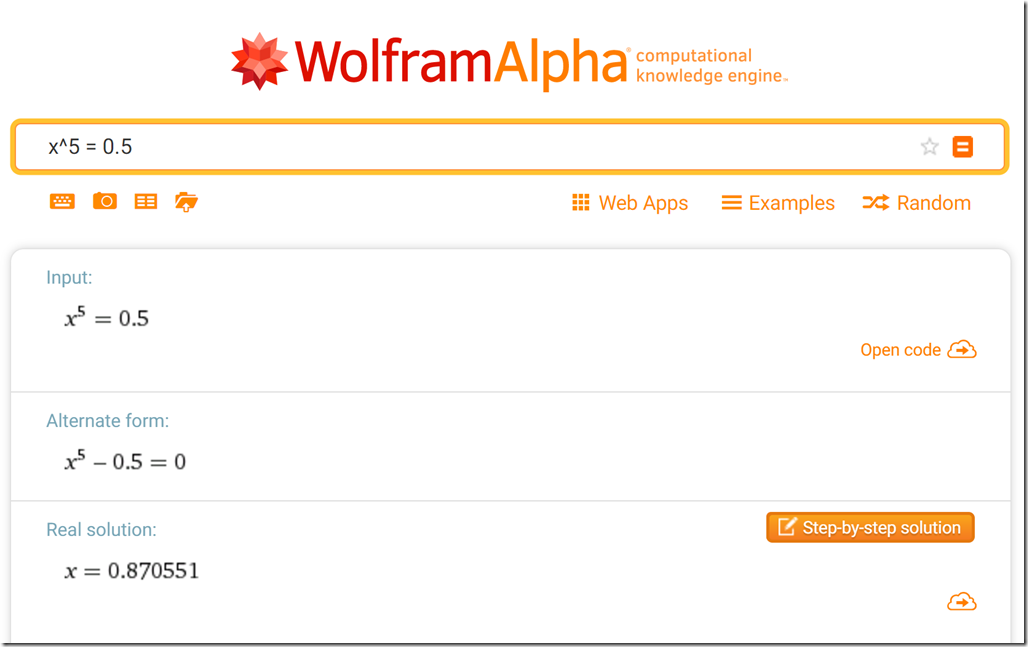

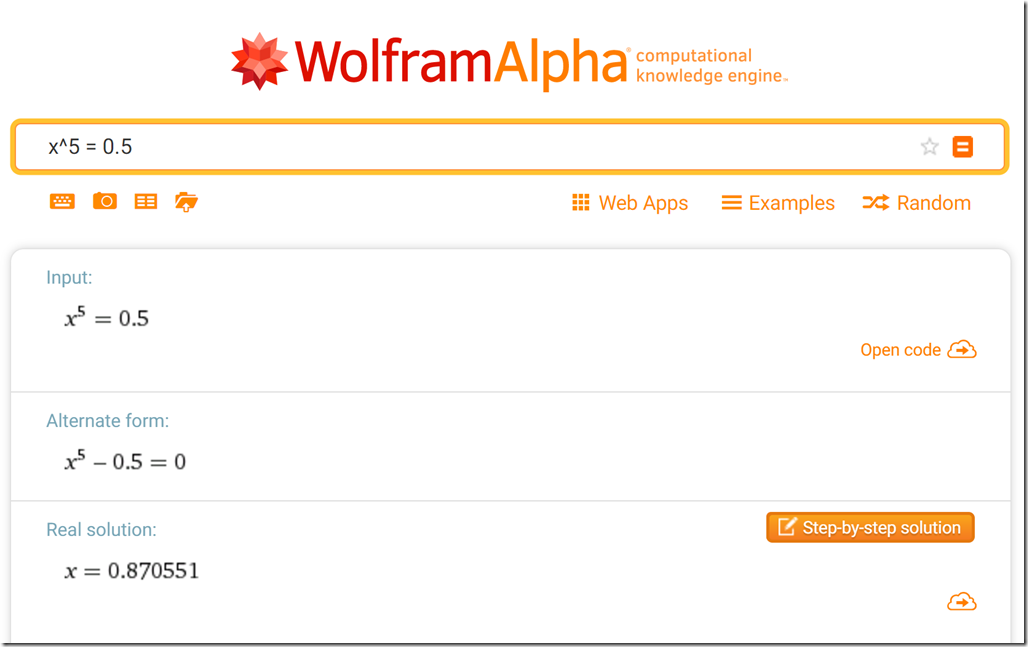

So the next question is this: if half of what you learn is either irrelevant or forgotten in 5 years, how much do you need to learn in a given year, to keep steady? Let’s ask our friends at Wolphram Alpha.

If x is our rate of decay and our half-life is 5 years, we can work backwards from that and solve for x. In this case, x equals 87%.

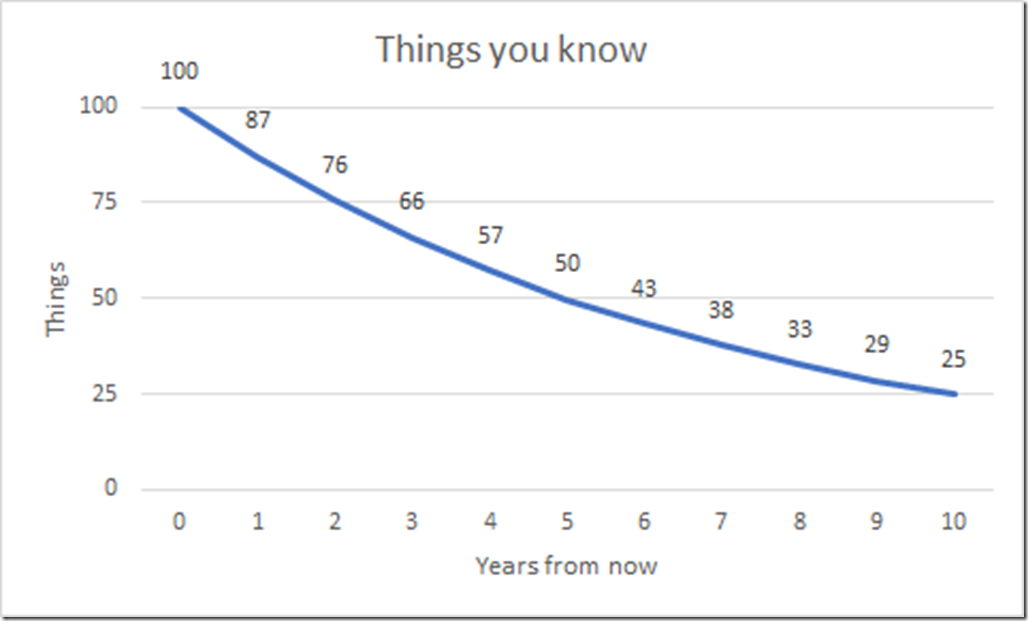

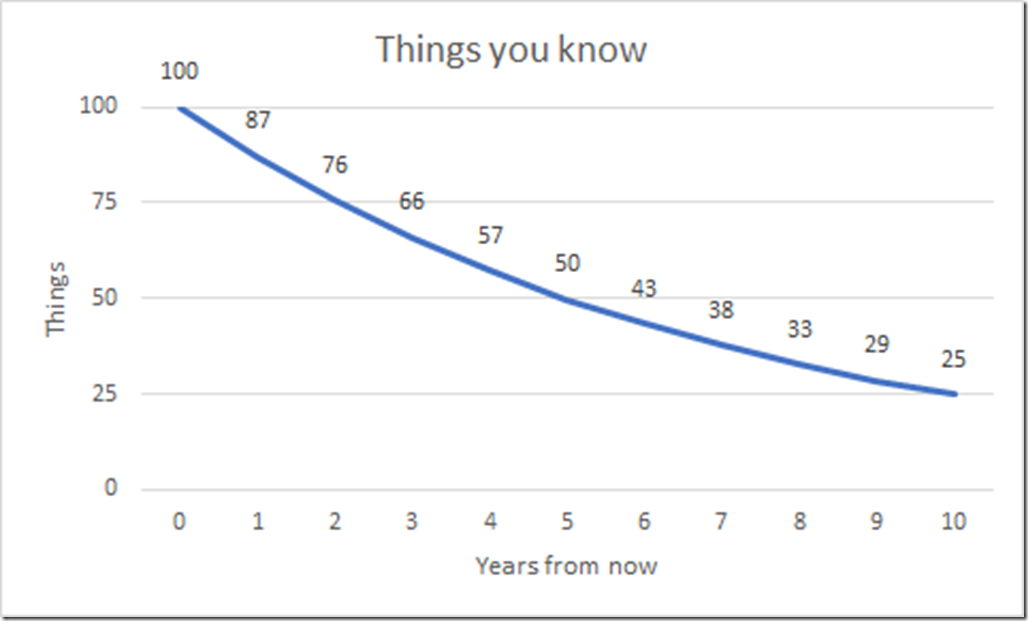

So, what that means is that if today you know 100 relevant things, then a year from now you’ll only know 87 relevant things. That’s like going from a solid A+ to a weak B+. You just lost a whole grade! Not good.

Below is a curve showing what this model looks like over 10 years.

Changing the math

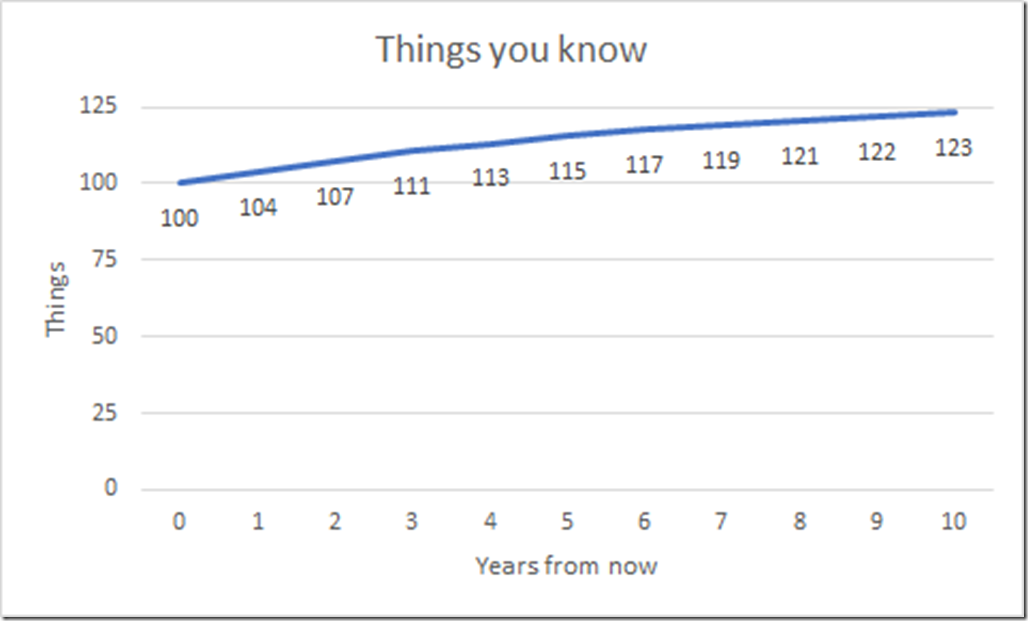

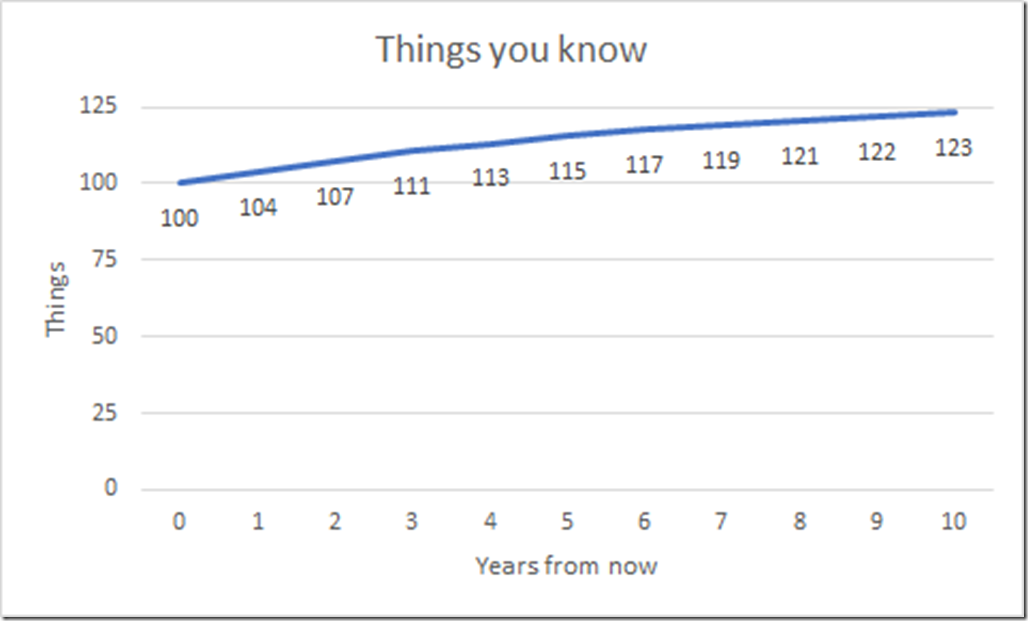

Well, this isn’t great. What if I want to know 120 things? How can we do that? One option is to learn more things.

Learn more things

If normally we have to learn 13 things each year, then we have to learn another 20 things on top of that. Or, if you are patient, you can learn an extra 4 things each year, and eventually you will get there.

Slow the decay

Another option is to change the rate of decay. Instead of learning more things each year, what if we didn’t have as much decay? If we can slow that rate of decay just a little bit, to a half-life a 6 years instead of 5 years, we’ll have the same effect. That means that instead of brute-forcing things, we might be able to be smarter.

What does this all mean?

So that gives a path forward. We need to either

- Increase the number of relevant things you can learn each year

- Or, decrease the rate of decay for relevant knowledge

These are the only three knobs we have. Either learn more stuff, learn the right stuff, or learn stuff that lasts longer. Let’s investigate all three.

How do we fix this?

Learning more things

So one solution to our dilemma is to brute force it. Let’s just learn more things and hope that they are the right ones. To do that, we can look at our inputs and constraints.

What are the constraints that affect our learning? There are 3 big ones I can think of:

- Time

- Energy

- Money

If we can increase any one of these, then we might be able to increase how much we can learn in a week.

Time

So, lets say we decide to go the simple route and go for volume. You’ve got a 168 hours in a week. You can’t make any more hours, and if you try to use all of them in a week, you are going to need some amphetamines.

So, if we can’t create more hours, how can we make more time? One option would be to cut out other activities. If you are willing to quit watching TV or playing video games, that frees up more time for learning. You could use a service like toggl.com to do a time audit and see where all of your time goes.

There is a limit to going down that path, however. You need to sleep. You need to have a social life. You need to have some fun. Like we said, you want to keep your job and keep your friends.

Multitasking

Another option is to multi-task. There is a lot of learning you can do that while doing other things. Things like podcasts are more about exposure than mastery. You can still get value out of them, even while mildly distracted. There are a number of times you could listen to a podcast:

- Commuting

- Exercising

- Washing dishes

This is a way to take back time you are spending on other things without giving up all your free time.

5 minute learning

Another way of reusing your time is taking advantage of those weird breaks in time. Those breaks that are too small to get anything meaningful done. The 15 minutes at the doctor’s office. The 5 minutes waiting for a meeting.

Feed readers are a great way to take advantage of these weird units of time. With Feedly, I’m able to to read a blog article during the 5 minutes I’m waiting. This is way more useful than playing candy crush or reading twitter.

Focus/energy

Okay, so let’s say you’ve managed to find more time in the day. Unfortunately, not all learning takes the same amount of energy. Reading Twitter is mindless. Configuring a homelab requires focus. Listening to a podcast is mindless. Making a presentation takes focus.

One way of getting more focus is to prioritize the harder learning for when you naturally have focus. For some people this is early morning. For many people this is the weekend. I know that after a long day of work, I’m wiped out.

Part of that is learning to take care of yourself. Eat healthy. Exercise. Get sleep. Focus is so easy to destroy by poor lifestyle.

Finally, if you schedule time consistently and push everything out, you can have more focus. I also find having a separate office/learning space helps with this too. Cut out the distractions. Install StayFocusd for Chrome to block timewasters.

Money

If you are anything like me, money is your most plentiful resource of all 3. When I was kid, I had no money at all, but tons of time. When I was in college, I had pretty much no money, and a good bit less time. Now that I have been working for a while, that equation has flipped. I’ve got plenty of money, but no time to use it.

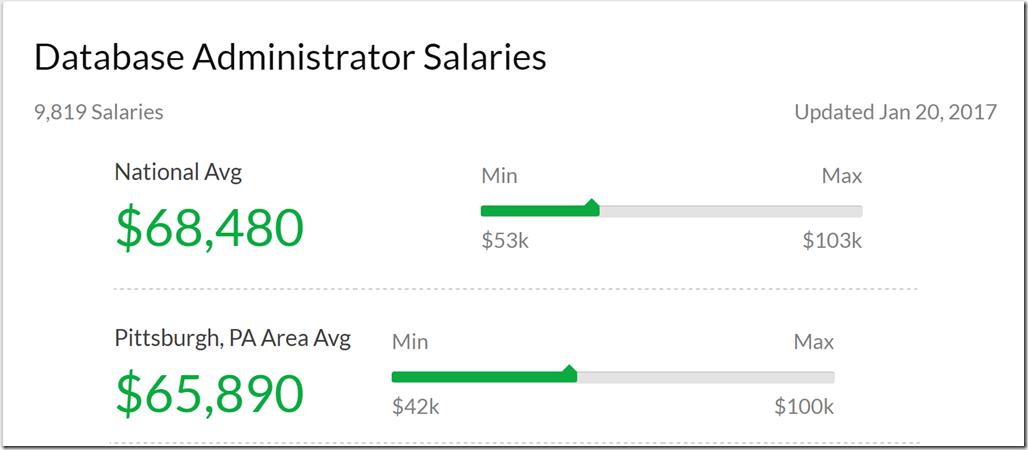

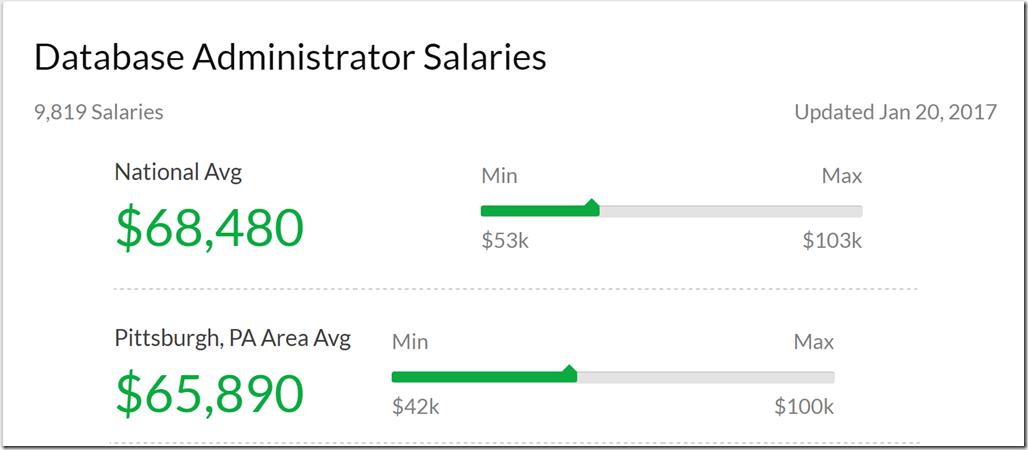

If you are an average DBA, you make plenty of money. According to the Bureau of Labor Statistics, the median salary for DBA’s is $85,000. The median for all occupations is $37,000. Think about that, that’s like 2.5x as much.

Now you may say “Hey, I don’t live in San Francisco.” or “Hey, I just got started.” Let’s take those factors into account. The market here in Pittsburgh is terrible, salary-wise. Even if you are just getting started in the field, you are probably making at least 40K.

Let’s do a little math on this. If you make 40K per year, you make roughly $20 per hour. that means your time is worth $20 per hour. If you can spend $5-10 to save an hour of your time, do it.

If a $50 book saves your 5 hours of Googling, buy it. If a Pluralsight subscription saves you 30 hours per year of frustration, buy the subscription. Don’t be afraid to spend money to save yourself time and energy.

Consider budgeting your money

If you don’t feel like you have a lot of money, I would deeply urge you to start budgeting. I used to look at my checking account to see if I had money. Then if there was money there, I would spend it. This would work until my car insurance bill would come in, and suddenly I needed $600. And just as suddenly, I didn’t have nearly as much money as I thought

Get a budgeting program. There’s some free one’s out there, but I use You Need a Budget.

Learning the right things

So we covered how to learn more things. An alternative is to learn the right things. If you learn the right thing, you’ve have less wasted effort.

Lean on curation

Sturgeon’s law says that 90% of anything is crap. That’s certainly true of learning materials. Not only is there a lot of bad training out there, but there’s a lot of content in general that just isn’t relevant. It’s cool that someone wrote an OS in Rust, but it’s probably not relevant to your job in any significant way.

If you are time poor or focus poor, you need a gatekeeper so the flood of possibilities doesn’t overwhelm you. This means depending on curation.

That curator could be you, first off. One of the reason I suggest a feed reader over Twitter is that you are able to heavily curate the content that hits your eyes. You are the one picking the blogs to read instead of the pachinko ball machine of social media and fate. You are the one deciding what’s relevant to you.

Another options is to depend on professionals in the field that you trust. Find people to follow that consistently have good material or recommendations.

The third option is to pay money. Sure, there’s some paid crap out there. But general it does act as a quality filter. Things like written books and video libraries are far less likely to be total crap, because those things have editors and investors and such. Someone was being paid to make sure it was worth making. Additionally, the author worked hard to condense the knowledge into a concise format. Be willing to pay money.

Having a plan

Okay, so now you are investing your time and energy into quality materials, but are you learning the right things? Are you learning the things that are right for you? You need to have a career plan. You need 1 and 3 year goals. Otherwise your career plan will be a spread out mess.

Figure out where you want to focus, figure out where you want to go. Pick a specialization. More of what you learn will be relevant if you know what that is.

Learning things that last longer

Okay, so we are learning more things and more of the right things. But we still have the problem that our knowledge is decaying whether from atrophy or irrelevance. This is the last step in the funnel and the last thing we can optimize.

Improving retention

One way to keep ahold of knowledge for longer is to lean on active learning. If something goes in through your eyes and ears and back out your fingers and mouth, you will retain it for longer.

If you want to specialize and go deeper, you need to do things like blogging, presenting,coding, etc. Reading isn’t enough. Writing isn’t enough. if you truly want to learn, you have to do.

Reddit and hacker news are good for exposure to a topic, but exposure fades quickly. Use exposure to get a lay of the land, then go deep once you find the right things to learn. If you focus on mastery, you are going to learn things in a way that last longer.

Avoiding planned obsolescence

Another issue is that certain areas of knowledge just have a faster rate of decay. Some of these are facts tied to a specific version of a technology. Another thing are unproven technologies that might turn out to just be fads. Big data is still in this phase, where there are so many technologies that will be gone forever in 5 years.

People change far more slowly than technologies, so skills relating to them last far longer. Soft skills never go out of style. Communications skills never go out of style. If you learn how to write an abstract today, that knowledge will still be relevant 40 years from now.

The fundamentals last longer too. The core basics of computer science are far more establish than this weeks JS framework. If you have solid underpinnings, you can take your knowledge of C++ to Go, for example. If you understand how a ACID and CAP theorem, The new consistency models of Cosmos DB will make sense. Under understanding of one layer beneath will allow you to jump to new technologies far quicker.

Putting it all together

To summarize what we talked about:

- Take advantage of multitasking to get back more time. Listen to podcasts while you drive or exercise.

- Invest in a pod catcher. I use itunes and an iPod nano.

- Invest in a feed reader. I use Feedly.

- Take care of yourself, physically. Diet, sleep and exercise will all improve focus.

- Schedule time to maintain focus. Treat focus as your most precious resource. Don’t browse twitter when you are firing on all cylinders.

- Be willing to spend money on curation. There is a good chance that you have more money than time or focus. Be willing to spend money to avoid wasting time.

- Understand exposure versus mastery. Spend your time on exposure and your focus on mastery.

- Pick a specialization. Have a plan on what technologies you need to go deep on. You won’t get there by accident.

- Learn things that last. Learn the fundamentals. Learn internals. Learn people skills. Do home labs.